Evaluating WAF solutions

Evaluating WAF solutions helps increase their effectiveness in detecting and blocking advanced threats.

Introduction

The evaluation of WAF solutions is one of the tasks performed by Tarlogic’s Cybersecurity laboratory.

This article describes a possible strategic approach to this type of evaluation, which is carried out from the point of view of a potential attacker. The methodology can also be used to analyze other defensive products such as RASP (Runtime Application Self-Protection).

This analysis brings multiple benefits. Some of them are listed below:

- Provide global and detailed metrics that measure detection effectiveness against several attack types.

- Enable a fact-based choice between different WAF solutions for a specific application.

- Evaluate and improve resistance against bypass attacks, using a combination of automated and advanced manual methodologies.

- Improve the WAF configuration through extensive testing that identifies possible issues caused by false positives or false negatives.

- Detect possible performance or usability issues caused by the WAF before deploying it in production environments.

- Test the WAF user interface by generating a large number of events and alerts of different types, which makes it possible to evaluate how well it highlights situations that may require action.

Objectives and infrastructure

The most appropriate infrastructure for the test campaign depends on the objectives of the analysis.

If the goal is to evaluate the characteristics of one or more WAF products independently of the context where they will be deployed, it is recommended to have several deliberately insecure applications protected by the solutions under test. These applications make it easier to obtain evidence of a bypass with working proofs of concept.

For this purpose, several vulnerable applications are available as open source in public repositories. Some well-known examples are WebGoat, JavaVulnerableLab, DVWA, JuiceShop, Hackazon, BodgeIT and bWAPP. The more heterogeneous the components of these applications are, the easier it will be to obtain evidence for different contexts and types of attacks.

This method is especially appropriate for the analysis of RASP solutions. While a WAF solution should always block an obvious payload like <script>alert(1)</script> regardless of whether the target resource is vulnerable to Cross-site scripting, a RASP solution could decide not to block it if its run-time analysis determines that the string will not be reflected in the HTML output and therefore poses no security risk. For this reason, a correct evaluation of a RASP solution requires previously identified vulnerabilities.

On the other hand, when the goal is to evaluate the behavior on a specific web application that will be publicly exposed, the attacks will be carried out, at least, against this web application. In general, the application will have no previously detected vulnerabilities, and identifying them is not part of the objectives of the analysis. Therefore, in this context the evidence of a possible WAF bypass can consist of the absence of a blocking response from the WAF after sending a payload that could be considered dangerous in a hypothetical context. This means, for example, that the absence of a blocking response to a payload like <script>alert(1)</script> can be considered sufficient evidence of an anomaly in the Cross-site scripting protection, even though the target endpoint is not vulnerable to this type of attack.
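The "absence of a blocking response" criterion can be sketched in a few lines of Python. This is a minimal illustration, not part of the original methodology: the status codes and body markers that identify a block are assumptions and must be adapted to the product under test, and the `Observation` type is a hypothetical helper that holds the result of one request.

```python
from dataclasses import dataclass

# Assumption: the WAF signals a block with one of these status codes or with
# a recognizable marker in the response body. Both sets are hypothetical.
BLOCK_STATUS_CODES = {403, 406, 429}
BLOCK_BODY_MARKERS = ("request blocked", "access denied")


@dataclass
class Observation:
    """Result of sending one payload through the WAF (hypothetical helper)."""
    payload: str
    status: int
    body: str


def is_blocked(obs):
    """Return True if the response looks like a WAF blocking response."""
    if obs.status in BLOCK_STATUS_CODES:
        return True
    lowered = obs.body.lower()
    return any(marker in lowered for marker in BLOCK_BODY_MARKERS)


def bypass_candidates(observations):
    """Payloads that were not blocked: each one is evidence of a possible bypass."""
    return [obs.payload for obs in observations if not is_blocked(obs)]
```

Each payload returned by `bypass_candidates` is only a candidate: it still has to be reviewed manually, because a response that is not blocked may also mean the WAF sanitized the request silently.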

Measurable characteristics

Before defining a scoring methodology that yields an effectiveness measure for the WAF, the characteristics to be analyzed must be determined. A good practice is to include, at least, the ability to block attacks related to the vulnerabilities that are part of the OWASP Top 10. Other characteristics that are relevant to the case under study can also be added, such as the ability to detect “Fake bots”, payloads in HTTP headers, or payloads in JSON/XML contexts, where WAF detection capabilities are sometimes weaker or totally absent.
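As an illustration of testing the same payload in several contexts, the sketch below builds one test case per context (query string, HTTP headers, JSON body, XML body). The function name, the parameter name `q` and the chosen headers are assumptions made for the example; they do not come from any specific WAF or application.

```python
import json
from xml.sax.saxutils import escape


def build_test_cases(payload):
    """Place one payload in several request contexts (hypothetical layout)."""
    return [
        # Classic context: payload in a query-string parameter.
        {"context": "query", "params": {"q": payload},
         "headers": {}, "body": None},
        # Payload carried in HTTP headers instead of parameters.
        {"context": "header", "params": {},
         "headers": {"User-Agent": payload, "Referer": payload}, "body": None},
        # Payload inside a JSON document.
        {"context": "json", "params": {},
         "headers": {"Content-Type": "application/json"},
         "body": json.dumps({"q": payload})},
        # Payload inside an XML document, entity-escaped as a well-formed
        # client would send it.
        {"context": "xml", "params": {},
         "headers": {"Content-Type": "application/xml"},
         "body": "<q>" + escape(payload) + "</q>"},
    ]
```

Comparing the WAF response across the four cases for the same payload shows quickly whether detection depends on where the payload travels.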

It must be taken into account that the analysis is useful not only to measure the product features in isolation, but also to identify possible problems related to the infrastructure in which the product has been deployed. The tasks should therefore include tests for global bypasses, such as connecting directly to the backend IP address in reverse proxy-based and cloud-based solutions, HTTP Desync Attacks, or custom encoding formats accepted by the application’s business logic.
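The direct connection to the backend can be sketched as follows, assuming the origin IP address is already known and the backend answers plain HTTP. The function name, the IP address (taken from a documentation range) and the hostname are placeholders; HTTPS backends would additionally need SNI handling, which is not shown here.

```python
import urllib.request


def build_direct_request(origin_ip, hostname, path="/"):
    """Build a request that targets the backend IP directly while keeping
    the public hostname in the Host header, so the WAF is not in the path."""
    url = f"http://{origin_ip}{path}"
    return urllib.request.Request(url, headers={"Host": hostname})


# Placeholder values for illustration only.
request = build_direct_request("203.0.113.10", "app.example.com", "/login")
```

Sending the request with `urllib.request.urlopen(request, timeout=5)` and receiving the normal application response would suggest that the backend accepts traffic that does not pass through the WAF. This check should only be run against systems included in the scope of the evaluation.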

To optimize the time spent and the amount of useful information in the results report, it is important to decide which types of attacks will be left out of the analysis. It must be assumed that, because of the way a WAF works, there are attacks the product cannot effectively protect against. In general, a WAF will not be able to protect, for example, against business logic-related attacks such as insecure direct object references. A RASP solution can sometimes be more flexible for this purpose.

Although most of the tests to evade the WAF will be based on creative, manual attack vectors, the importance of a preliminary phase with automated tools must not be underestimated. This phase detects weaknesses that can be exploited with automated tools and that are therefore highly likely to be exploited in practice, since launching these types of attacks requires little expertise or skill.

Scoring methodology

A scoring methodology provides a global overview of the efficiency of the analyzed product, as well as of the effectiveness of its protection against the different types of vulnerabilities. The resulting scores are useful to detect weaknesses as part of a continuous improvement cycle, or to compare different solutions in support of the decision-making process.

It is important to be aware of the relativity and subjectivity of the proposed metrics, which are described in the following sections. The concepts of “basic protection” and “advanced protection”, and the bypasses found, may vary depending on the experience, knowledge and skills of the pentester who performs the analysis. In general, a protection will be considered advanced if no evasions are detected, or if the identified evasions require non-trivial attack vectors that combine multiple techniques. It must also be assumed that an attacker with enough technical resources and time will always be able to find additional issues. Nevertheless, the results provide a valuable reference for the WAF effectiveness. They can serve as a metric within a continuous improvement process, or help to choose between WAF solutions or configurations.

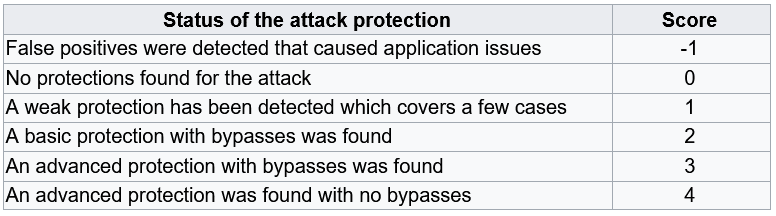

The following table defines a scoring scale to evaluate effectiveness against the different attack categories:

Note that any negative effects on the application's functionality caused by false positives subtract points from the result.

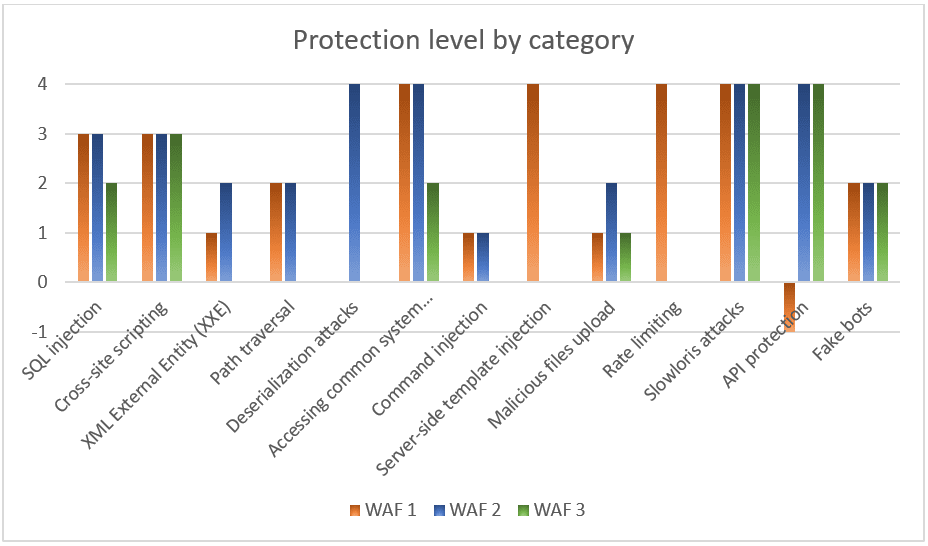

The following diagram shows an example of applying this scoring scale to three different WAF solutions:

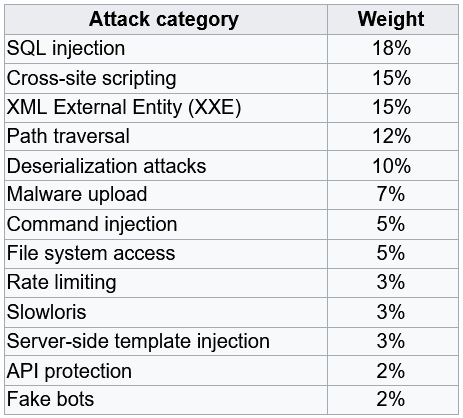

After obtaining the score for every attack category, it is possible to calculate a global score for the WAF. For this task, it is useful to weight the different attack categories. This approach gives more importance to certain types of attacks than to others, taking into account circumstances such as the probability of suffering a given type of attack, its potential impact, or any other business interest. For example, protection against SQL injection, a category included in the OWASP Top 10, is generally given a greater weight than protection against “Fake bots”.

The following table shows a possible scenario, with different weights for the different categories. As previously stated, the weights must be adapted to the specific application to be protected.

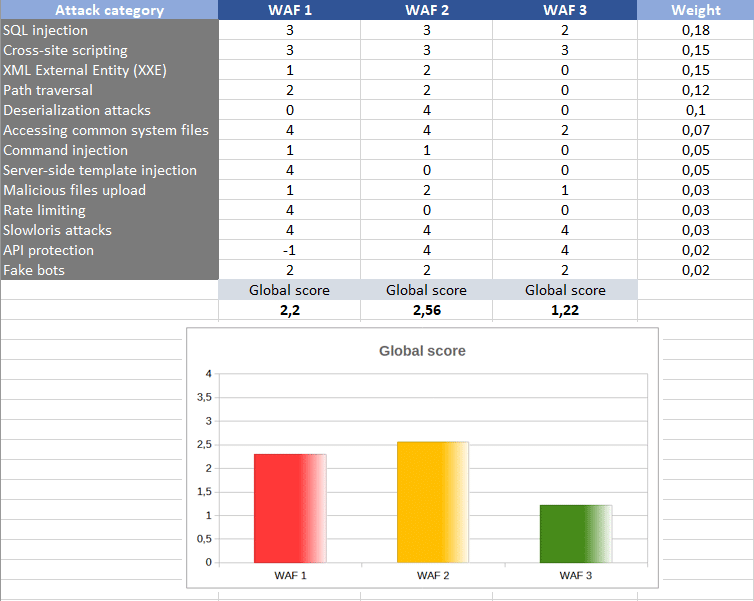

The next image shows an example of the global scoring results for three different WAF solutions, calculated as the average of the category scores weighted by the chosen weights:
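The weighted average described above can be sketched as follows. The category names, scores and weights are illustrative values chosen for this example; they are not taken from the tables in this article.

```python
def global_score(scores, weights):
    """Weighted average of per-category scores.

    scores  -- mapping of attack category to the score obtained by the WAF
    weights -- mapping of attack category to its relative importance
    """
    missing = set(scores) - set(weights)
    if missing:
        raise ValueError(f"No weight defined for: {sorted(missing)}")
    total_weight = sum(weights[category] for category in scores)
    if total_weight <= 0:
        raise ValueError("The sum of the weights must be positive")
    weighted_sum = sum(scores[c] * weights[c] for c in scores)
    return weighted_sum / total_weight


# Illustrative values only (hypothetical scale and weights).
example_scores = {"sql_injection": 8, "xss": 6, "fake_bots": 4}
example_weights = {"sql_injection": 3, "xss": 2, "fake_bots": 1}
example_result = global_score(example_scores, example_weights)
```

With these values the result is (8·3 + 6·2 + 4·1) / (3 + 2 + 1) = 40 / 6, approximately 6.67. Dividing by the sum of the weights keeps the global score on the same scale as the category scores, whatever weights are chosen.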

Conclusions

Choosing the defensive solution that best suits the needs of our web applications can be a complex decision. It is also worth evaluating to what extent a solution already integrated in the architecture, or a new defensive product, is really effective and efficient against various types of advanced threats, in order to detect potential weaknesses and improve its performance.

The evaluation of WAF solutions provides valuable information to guide the selection of the best WAF solution and configuration, and to drive and measure a continuous improvement process for the WAF.
