The Way of the Hunter: Defining an ad hoc EDR evaluation methodology

Threat Hunting is a very popular term in the infosec community nowadays. However, there is no widely shared definition of the role. Discrepancies persist because everyone considers their own implementation to be the right one. Nevertheless, although the sector has yet to agree officially on what exactly being a Threat Hunter entails and what their scope of action is, consensus has been reached on some aspects.

First, Threat Hunting has an implicit proactive nature that traditional cybersecurity defence roles do not share. Companies used to be limited to taking every available preventive and reactive action to protect their infrastructure and hoping for the best: avoiding compromise, or at least being able to mitigate the damage when it eventually happened. The figure of the Threat Hunter stems from the need to take proactive measures on the defensive side to keep up with the ever-increasing quantity and sophistication of the cyberattacks observed in recent years.

Its appearance changes the classic game of Threat Actor vs. Victim Company, as there is now a new player on the board: the Hunter, whose role requires deep offensive knowledge that is, for the first time, applied to staying one step ahead of the Threat Actor. That knowledge is based on researching state-of-the-art attacks and dissecting how they work to understand how current adversaries think and what their go-to techniques are. With this new player on the board, the traditional victim gains its own offensive-driven guardian, a person who uses their offensive knowledge to detect and stop incidents before they become unmanageable.

So far, we have established the basics of what a Threat Hunter is, at a level that most providers of Threat Hunting services should agree on. Now we will go further into the specifics of what our own concept of Threat Hunting encompasses.

Although we think a Threat Hunter must have a transversal skillset that makes them self-sufficient in every area in which an attacker could leave traces, our service performs most of its hunting from the perspective of the endpoint, and our weapon of choice is the EDR/XDR.

Every day we start from the hypothesis that all our clients have been compromised in some way. To refute it, we search their infrastructure for evidence with hundreds of queries that look for specific techniques an adversary could have used to get through the client’s perimeter. Those queries are the result of continuous research, and they are designed to find not only techniques that APTs have been seen using in the wild, but also novel techniques that could be used even if they have not been observed yet.

After running our queries, we go through every match and discard false positives. To perform this analysis, we use the telemetry that the endpoints feed to the EDR/XDR. Although every EDR/XDR has its particularities, all of the ones that we homologate provide the features we need to answer the questions we raise when evaluating a match. Only when we are sure that none of the results is related to malicious behaviour do we rule out the hypothesis of compromise.
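To make that decision rule concrete, here is a minimal Python sketch, assuming each query match has already been triaged into one of three hypothetical verdicts. The names `Verdict`, `Match`, `compromise_hypothesis_rejected` and `open_matches` are illustrative only and are not part of any EDR's API.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    FALSE_POSITIVE = "false_positive"  # triaged and explained as benign
    MALICIOUS = "malicious"            # confirmed suspicious activity
    UNRESOLVED = "unresolved"          # telemetry has not answered our questions yet


@dataclass
class Match:
    query_id: str     # hypothetical identifier of the hunting query that fired
    host: str         # endpoint where the match was observed
    verdict: Verdict


def compromise_hypothesis_rejected(matches: list[Match]) -> bool:
    """The compromise hypothesis is only rejected when every match has been
    triaged as a false positive; anything malicious or unresolved keeps it open."""
    return all(m.verdict is Verdict.FALSE_POSITIVE for m in matches)


def open_matches(matches: list[Match]) -> list[Match]:
    """Matches that still require analysis with EDR telemetry."""
    return [m for m in matches if m.verdict is not Verdict.FALSE_POSITIVE]
```

Note that an empty list of matches also rejects the hypothesis, since there is no evidence left to explain, while a single `UNRESOLVED` match keeps it open until the telemetry answers our questions.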

Approaching the creation of an EDR evaluation methodology

When it comes to what makes an «EDR» an EDR, and what its core features are, we once again find a diversity of criteria. In this case, it is not so much due to a lack of consensus in the infosec community itself, but to vendors, who often tag their security solutions with the «EDR» label to enhance the appeal of their product, even if it does not meet the most basic criteria of what an EDR should do.

We have already stated that the EDR/XDR is our weapon of choice. As an independent provider, we strive to be technology agnostic and to blend in with heterogeneous environments as smoothly as possible. Therefore, it is a must for us to know and keep track of the solutions that can support our Threat Hunting Model, the ones that are incompatible, and how both groups evolve over time. To formalise that process and make it scalable, we decided to create a custom EDR evaluation methodology.

State of the art of EDR evaluation

We approached the task from the perspective of our own broad experience in providing a high-quality Threat Hunting service. This has meant working with several EDR solutions over the years, each with its pros and cons. However, research was also needed to establish a baseline of how the community has been evaluating EDRs so far, to check whether any existing approach suits our Threat Hunting Model, and to avoid duplicating effort in case there were already ongoing projects that met our requirements. During this investigation, we found that the current trend in EDR evaluation roughly follows two branches.

Technical evaluation projects

This kind of evaluation focuses on the raw detection capabilities of EDRs. Having a good range of detections is without a doubt a key feature of any solution that aims to call itself an EDR. However, we decided that this kind of evaluation is not enough on its own and should be complemented with functional ones. That is because even if an EDR has the best detection rate, if it does not retrieve telemetry that we can use to reject our compromise hypothesis, or if it lacks the basic features to respond to an incident, the Threat Hunting service will be limited.

On the other hand, an EDR with a narrower detection range but more in tune with our Threat Hunting model could be enhanced by our extensive knowledge base and become a viable option.

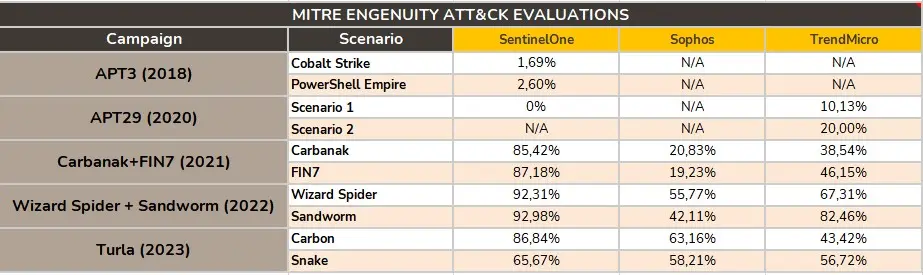

In this category, we would like to highlight the MITRE Engenuity ATT&CK Evaluations project, which provides a yearly evaluation of the detection capabilities of the EDR solutions that apply to prove their efficacy. We used these evaluations as one scoring factor for our own EDR evaluation methodology.

Functional evaluation projects

These projects prioritize evaluating the quantity of telemetry provided by EDRs. In this category, we also found remarkable projects. We would like to make a special mention of the EDR Telemetry Project. This project was created to «compare and evaluate the telemetry potential from those tools while encouraging EDR vendors to be more transparent about the telemetry features they do provide to their users and customers».

It provides a very valuable checklist of the telemetry sources that different EDR solutions use, and consequently of the coverage we can expect from them. Although this project is closer to the approach we want to follow for our custom methodology, it does not completely align with our needs. Nevertheless, we ended up using it as a guide to decide which new EDR candidates are worth evaluating with our custom methodology.

Although our EDR evaluation methodology also evaluates the telemetry provided by an EDR, we focus more on the concrete data recovered from each data source, making sure that the information gathered is of high enough quality for us to implement our hunting queries. Hence, we created a symbiotic relationship between our methodology and this project and avoided duplicating the great work they are already doing.

Although none of the existing projects matched our very specific needs, they laid a strong foundation and gave us a starting point.

Once the state-of-the-art research phase ended, we started to define the custom parts of our EDR evaluation methodology. Defining them accounts for most of the work in this project. It involves formalising the complete set of features that an EDR should implement to meet our quality standard for performing Threat Hunting.

The selection of those features is synthesized from our own technical expertise, acquired over years of providing a Threat Hunting service. Note that since our service is technology agnostic, and we have worked with and evaluated several EDR technologies over the years, this feature checklist could be considered a compilation of all the remarkable aspects of the different EDR solutions we have encountered so far.

As a final note before diving into the sections in which we classified those features, it is important to clarify that this EDR evaluation methodology is intended to be applied under the premise that, for any EDR, we have:

- Access to the EDR documentation.

- Access to a real environment with the EDR deployed so we can test and verify features.

- Access to the EDR’s support team, as a lack of documentation sometimes leaves uncertainty about the EDR’s reach in areas that are hard to reproduce in a real environment.

Without those three elements, we don’t think that the evaluation would be a representative reflection of the quality of the solution and how it would interact with our Threat Hunting model.

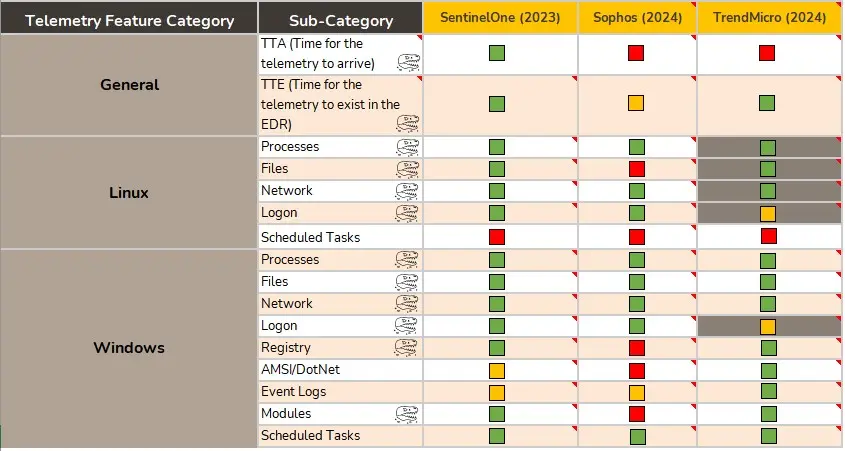

Telemetry

In this section we evaluate whether the available telemetry is sufficient, in quantity and quality, to support our Threat Hunting model. That makes this section especially dependent on our approach to Threat Hunting. However, we try to be as verbose as possible in our evaluations, including comments that justify the score we give each feature. In that sense, we believe that this telemetry section could be one of the most useful for other service providers, as we all analyse telemetry in one way or another.

Two of the features that we consider most important and worth explaining in this section are the TTA (Time for the Telemetry to Arrive) and TTL (Time that telemetry Lives, i.e. is available in the EDR).

TTA matters because, if we find any trace of suspicious activity, we need to be sure that we can ask the telemetry, in real time, all the questions required to resolve the case. If the telemetry takes, say, 15 minutes to arrive, we will be at least 15 minutes behind the adversary at any given moment. This may not seem like much of a time lapse, but in a live incident it could mean the difference between an early mitigation and a full compromise.
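One way to put a number on TTA is to generate a harmless, uniquely tagged event on a test endpoint and poll the EDR until that event becomes queryable. The Python sketch below assumes two hypothetical callables supplied by the evaluator: `emit_marker`, which triggers the event (for example, by running a benign command containing the marker), and `marker_visible`, which searches for the marker through the EDR console or API. Neither is a real EDR function, and the measured delay is only as precise as the polling interval.

```python
import statistics
import time
import uuid
from typing import Callable, Optional


def measure_tta(
    emit_marker: Callable[[str], None],
    marker_visible: Callable[[str], bool],
    timeout_s: float = 3600.0,
    poll_interval_s: float = 10.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[float]:
    """Seconds until a uniquely tagged benign event becomes queryable in the EDR,
    or None if it does not appear within timeout_s.

    The result is only as precise as poll_interval_s."""
    marker = f"tta-probe-{uuid.uuid4().hex}"  # unique string to search for
    start = clock()
    emit_marker(marker)  # hypothetical: run a harmless command containing the marker
    while clock() - start <= timeout_s:
        if marker_visible(marker):  # hypothetical: query the EDR for the marker
            return clock() - start
        sleep(poll_interval_s)
    return None


def summarise_tta(samples_s: list[float]) -> dict[str, float]:
    """Median and worst case over repeated probes."""
    if not samples_s:
        raise ValueError("need at least one TTA sample")
    return {"median_s": statistics.median(samples_s), "max_s": max(samples_s)}
```

Repeating the probe at different times of the day and keeping the worst case is a reasonable choice here, since it is the worst case, not the average, that determines how far behind an adversary we could fall. The clock and sleep functions are injectable so the logic can be exercised without waiting in real time.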

TTL is also a very valuable feature for us. To detect suspicious behaviour, the first thing we need to know is what normal behaviour looks like in the environment. When we start working with a client, we cannot immediately learn how their environment works, as that comes with time. Having older telemetry available helps us make up for that lack of context. This feature also takes into account limitations in telemetry storage, such as:

- Some EDR technologies stop storing telemetry from an endpoint once a certain storage threshold has been reached. This kind of limitation could enable Denial of Service attacks, in which an adversary generates telemetry from non-suspicious activity until the EDR stops storing it, and then carries out their operations while we are blind.

- Some EDR technologies have telemetry models that store only part of the information in the cloud and let the analyst query the devices to obtain further context. In these cases, an offline machine means losing access to its telemetry.
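Both TTL and the first storage limitation can be checked with a small amount of bookkeeping per endpoint. The following Python sketch assumes we can obtain, for each host, its enrolment date, the oldest and newest telemetry still queryable, and whether the agent reports as online. The `EndpointTelemetry` structure, the `retention_findings` function and the thresholds are hypothetical and would have to be filled from whatever the evaluated EDR exposes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class EndpointTelemetry:
    host: str
    enrolled_at: datetime   # when the EDR agent was deployed on the host
    oldest_event: datetime  # oldest telemetry still queryable for the host
    newest_event: datetime  # most recent telemetry received for the host
    online: bool            # whether the agent currently reports as connected


def retention_findings(
    endpoints: list[EndpointTelemetry],
    now: datetime,
    required_retention: timedelta,
    max_silence: timedelta,
) -> list[tuple[str, str]]:
    """Flag hosts whose telemetry history is too short (TTL) or that look
    blinded, e.g. because a per-endpoint storage threshold was reached."""
    findings = []
    for ep in endpoints:
        # Only hosts enrolled for longer than the requirement can reveal a short TTL.
        long_enough = now - ep.enrolled_at >= required_retention
        if long_enough and now - ep.oldest_event < required_retention:
            findings.append((ep.host, "telemetry retention below requirement"))
        # An online host that has gone quiet may have stopped being recorded.
        if ep.online and now - ep.newest_event > max_silence:
            findings.append((ep.host, "online but no recent telemetry"))
    return findings
```

Hosts enrolled more recently than the retention requirement are skipped for the retention check, since their short history says nothing about the EDR's TTL. An online host with no recent telemetry is not proof of a storage cap, but it is exactly the kind of blind spot worth investigating further.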

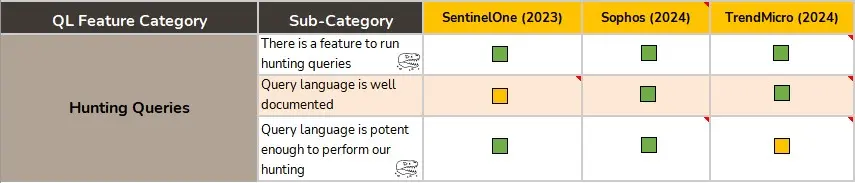

Query Language

We are always open to using the query languages of new EDR solutions. However, we have requirements that every solution and its query language must meet. First, the EDR must allow us to query the telemetry. If it does, its language must be powerful enough for us to create hunting rules and translate all our threat knowledge into it. A poor implementation of some of the features in this section could constrain investigations with that solution when signs of suspicious activity appear, as the questions we launch against the telemetry require a certain level of expressive power.

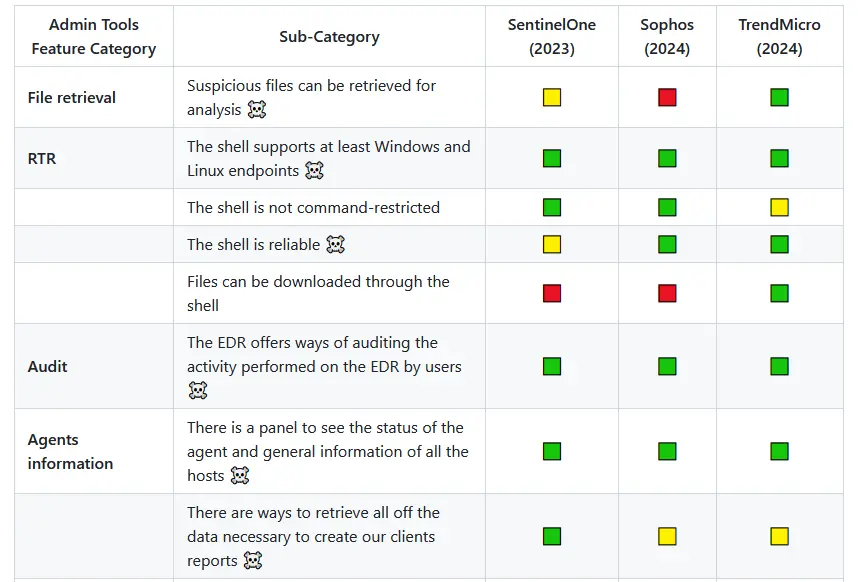

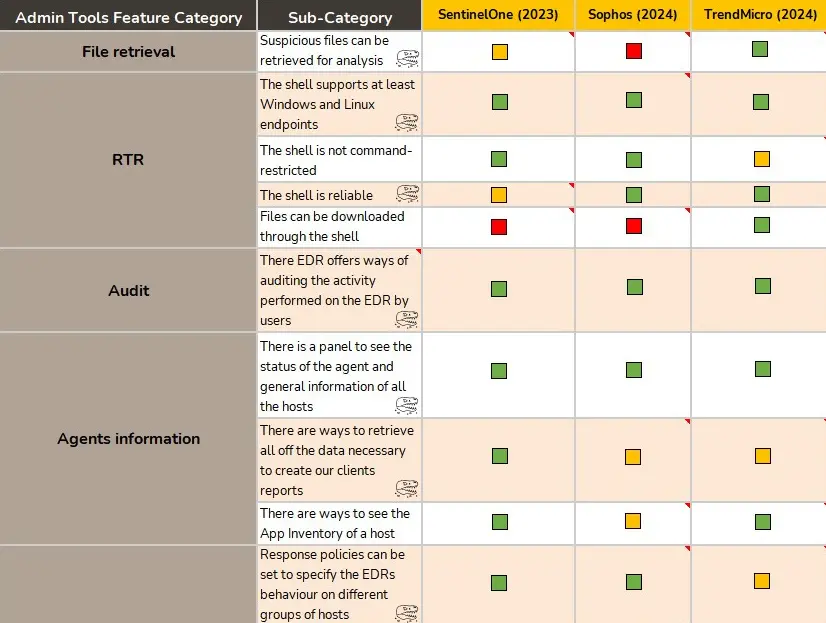

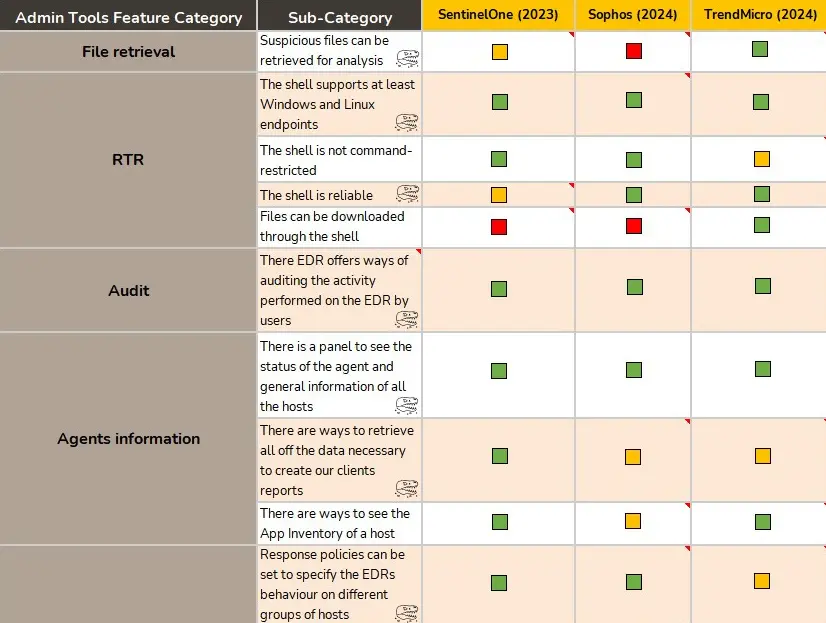

Administrative tools

This section encompasses all the administrative features that we need in an EDR solution. From audit logs to security policies and their flexibility, we evaluate several categories of features.

Features

This section covers general features that are less related to administering the EDR and more to its day-to-day use in our tasks. Hence, it includes features such as the implementation of artifact retrieval mechanisms, the flexibility to establish exclusions, or how response and mitigation actions can be applied, among many others.

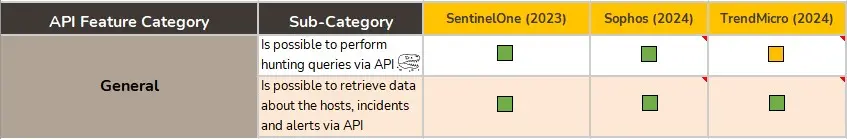

API

By the time the evaluation reaches this section, we already have an idea of the EDR’s performance, its strong points and its shortcomings. In this section we look at how much of its potential is available through the API, which will enable or constrain the sustainability of the service with a given EDR solution.

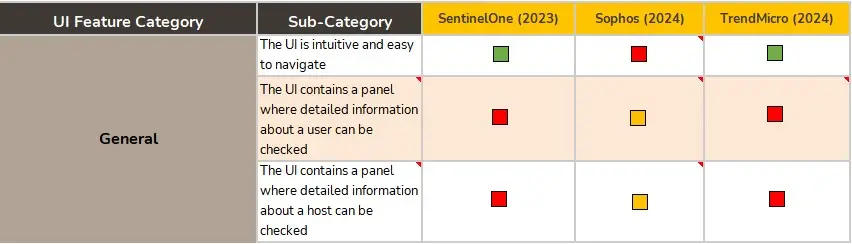

UI

While it is not the most important section, the UI section should not be underestimated. A Threat Hunter must be able to move smoothly through the EDR’s panels during an investigation. We are not talking about the Threat Hunter’s «comfort» here, but about how the UI can help or hinder in minimising analysis time.

MITRE Engenuity

We have integrated the MITRE Engenuity results into our scoring system. More specifically, for each of MITRE’s exercises we use the detections that every EDR was able to generate without any modifications. This gives us an idea of the EDR’s raw capability to detect malicious behaviour, and of how it has evolved over the years.
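As a sketch of how these results could be turned into a trend, the snippet below assumes each evaluation round has already been reduced to two numbers: the sub-steps the EDR detected without any modifications and the total number of sub-steps in that round. The `detection_trend` function and its input shape are hypothetical, not part of MITRE Engenuity's published data format, and round labels are assumed to sort chronologically (e.g. years).

```python
from typing import Optional


def detection_trend(
    rounds: dict[str, tuple[int, int]],
) -> list[tuple[str, float, Optional[float]]]:
    """rounds maps an evaluation round label (assumed to sort chronologically)
    to (sub-steps detected without modifications, total sub-steps).

    Returns (label, detection ratio, change versus the previous round)."""
    trend: list[tuple[str, float, Optional[float]]] = []
    previous: Optional[float] = None
    for label, (detected, total) in sorted(rounds.items()):
        if total <= 0 or not 0 <= detected <= total:
            raise ValueError(f"inconsistent counts for round {label!r}")
        ratio = detected / total
        delta = None if previous is None else ratio - previous
        trend.append((label, ratio, delta))
        previous = ratio
    return trend
```

Normalising by the total number of sub-steps matters because the size of each round changes from year to year, so raw detection counts are not directly comparable.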

Conclusions

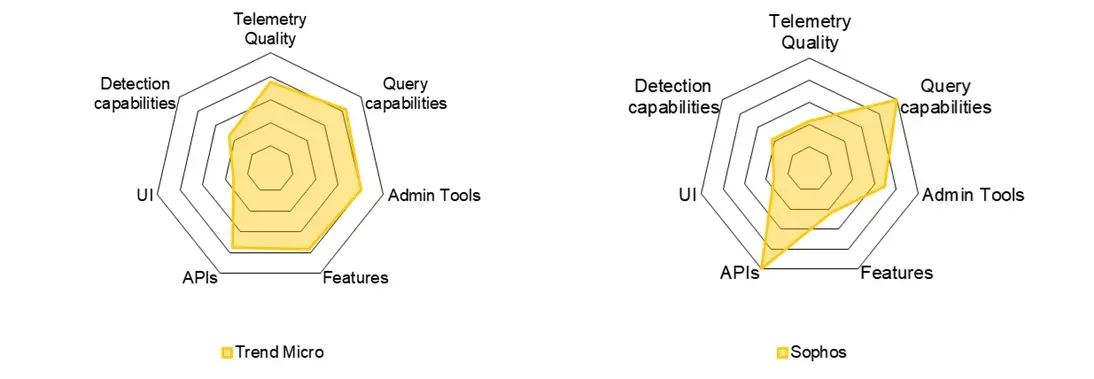

In this last section we provide a summarized version of every other section for each EDR evaluation, together with a conclusion on how compatible that solution is with our Threat Hunting model. Note that although we have an empirical scoring system, the final conclusions are heavily influenced by the hunters’ interpretation of that scoring. Here we merge the raw data describing the pros and cons of the evaluated solution with the expert opinion of the team to reach a final conclusion on whether the EDR, in its current state, is suitable to a greater or lesser extent for deploying a Threat Hunting service that meets our quality standards.

It is worth mentioning that this methodology is intended to be applied to the solutions periodically. By doing so, we will know not only their current state, but also how they are evolving and in which direction.

Challenges of EDR evaluation

Defining a NO-GO feature

We are aware that some of the features we require in an EDR solution are more important than others. Therefore, we need to be able to single out the features that we consider to play a central role in deploying our Threat Hunting service. We call them NO-GO features.

The absence of these features in an EDR solution is considered a significant sign of immaturity, so that solution will most probably not be homologated as sufficiently suitable for our Threat Hunting model.

Note that part of these evaluations’ objective is to share why we lean towards certain solutions instead of others in a deterministic and empirical way, but in no way do we seek to make our analysis a technology guillotine. We do not rule out using a solution that hasn’t been homologated, as long as we can be transparent about its limitations and they are acceptable to our customers.

Lack of documentation or cooperation by support

During the first evaluations we carried out, it became apparent that some EDR solutions have richer documentation than others. Likewise, dealing with the products’ support teams can sometimes be rather tedious.

The first levels of support often act as a kind of redirector to the available documentation, even when the ticket is precisely about the lack of publicly available documentation. Then, if you are lucky enough to get your ticket escalated, they are not always cooperative when it comes to giving answers about the infrastructure or providing information that is not already published.

That said, we must admit that during some of the evaluations we have also encountered very cooperative support teams (although it is not the most common case).

Defining a scoring system

Our EDR evaluation methodology uses several inputs to arrive at the final conclusion of whether or not an EDR solution is homologable with our quality standard for a Threat Hunting service. Specifically, we consider:

- Degree and quality of implementation of the features that we have included in the methodology as desirable or necessary (NO-GO features). We use a three-colour system to score this. If a feature is not implemented, we mark it red. If a feature is partially implemented or has important caveats, we mark it yellow. Finally, if a feature is implemented satisfactorily, we mark it green.

- As we previously stated, every EDR evaluated has to be tested for a sufficient period of time in a deployed environment in which we can verify its capabilities. During the hands-on part of the analysis, answering the question «Is this feature implemented?» with the three-colour system is often not enough. To add detail to the score given, we use notes that convey relevant information about the feature’s implementation, especially when it has been scored yellow.

- Once the evaluation is complete, we perform a synthesis for each section of features, gathering the most important findings from the three-colour scoring and the notes to highlight the best and worst of the EDR in that section. This information is available in the «Conclusions» section.

- In the last step, the team takes as input the grading and notes of the features, as well as the synthesized version created in the previous step. With those inputs, the Threat Hunting team can properly discuss the evaluation results and reach a group consensus on the final conclusion (i.e. the answer to the question «Is this EDR compatible with our quality standard to deploy our Threat Hunting service without constraints?»).
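The inputs described above fit in a very small data model. The Python sketch below is illustrative only: it assumes each feature is recorded with its section, colour, NO-GO flag and note, and the names `Colour`, `FeatureScore`, `section_synthesis`, `no_go_gaps` and `yellow_without_note` are hypothetical. It deliberately stops at producing material for the team's discussion (per-section colour counts, NO-GO gaps and yellow scores missing a justification), because the final conclusion remains a group consensus rather than an automatic verdict.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Colour(Enum):
    RED = "red"        # not implemented
    YELLOW = "yellow"  # partially implemented or with important caveats
    GREEN = "green"    # implemented satisfactorily


@dataclass
class FeatureScore:
    section: str         # e.g. "Telemetry", "Query Language", "API"
    feature: str
    colour: Colour
    no_go: bool = False  # True for NO-GO (necessary) features
    note: str = ""       # free-text justification, expected for yellow scores


def section_synthesis(scores: list[FeatureScore]) -> dict[str, Counter]:
    """Colour counts per section, as raw input for the «Conclusions» section."""
    summary: dict[str, Counter] = {}
    for s in scores:
        summary.setdefault(s.section, Counter())[s.colour] += 1
    return summary


def no_go_gaps(scores: list[FeatureScore]) -> list[tuple[str, Colour]]:
    """NO-GO features that are missing (red) or only partial (yellow)."""
    return [(s.feature, s.colour) for s in scores if s.no_go and s.colour is not Colour.GREEN]


def yellow_without_note(scores: list[FeatureScore]) -> list[str]:
    """Yellow scores lacking the note that should justify them."""
    return [s.feature for s in scores if s.colour is Colour.YELLOW and not s.note.strip()]
```

For example, a red NO-GO feature such as a made-up "Query telemetry via API" would surface in `no_go_gaps` as a strong argument against homologation, while still leaving the final decision to the team.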

Sharing the product of our project

As discussed at the beginning of this article, how to perform Threat Hunting is still subject to debate. However, we firmly believe that our model is one of the most complete approaches in the sector, as we strive to improve whenever possible and prioritize providing value through all of our projects. That is why we have decided to share here the implementation of our EDR evaluation methodology, with a sample of results to ease its interpretation.

By sharing this methodology and some of its results, we intend not only to illustrate this article, but also to show how important it is to select a good technology and how this decision may severely condition the Threat Hunting service built on it.

We hope that this article will serve as a showcase of how we do things and the importance we attach to a serious Threat Hunting service. We would also like to encourage other researchers in the community to exchange ideas and experiences, as well as to give us feedback that will allow us to further improve the project.

Happy Hunting!